|

David Graeber was right about BS jobs, says Max Murphy. Basically, our economy is bifurcating into two kinds of jobs: "essential" jobs that, despite being "essential", are poorly paid and unappreciated, and "BS" (I'm just going to abbreviate) jobs that are highly paid but accomplish nothing useful for anybody. The surprise, perhaps, is that these BS jobs, despite being well paid, are genuinely soul-crushing.

My question, though, is how much of this is due to technological advancement, and whether the continued advancement of technology (AI, etc.) will push the ratio of BS jobs to essential jobs even further toward the BS jobs. |

|

|

"Who would really win a civil war?", ponders "Monsieur Z". Before I reveal what he says, let me preface it by saying in my mind, I always figured if there was a civil war in this country between the liberal and conservative sides (which sadly seems increasingly likely over the years), the liberal side would win. The "logic" behind this prediction, such as it is, is simply that all the economic growth for the last several decades has been concentrated in the tech companies and major cities of the liberal side, and economic growth ultimately determines the winner. That's not to say that a civil war couldn't be extremely bloody and worth avoiding because of the loss of life it would cause.

Ok, having said that, I never encountered this YouTube channel before, and I get the impression this guy is a conservative, since he seems to sympathize more with the conservative side. If you're familiar with this channel feel free to chime in. (Hopefully sharing a link to this channel won't get you in trouble -- I think YouTube keeps track of who watches disapproved channels.) It seems he's some sort of military historian. Anyway, he predicts a win for the liberal side. The greatest advantage the conservative side has, supposedly, is they control the food supply, but at a practical level, they cannot cut off the food supply to liberals because that would also cut off the food supply to each other in the process. More generally, the conservative side is too fractured and distrustful to organize into a combat force that could successfully take on the US military, even as a guerrilla combat force, and the military will, he predicts, be successful at purging conservative sympathizers from their ranks. |

|

|

"Humane AI Pin review: A $700 gamble," says David Pierce (Vergecast). The hardware is pretty good but the AI slow enough and error-prone enough to be frustrating.

I guess the dream of the "linguistic user interface" is not here yet. |

|

|

"AI could actually help rebuild the middle class," says David Autor.

"Artificial intelligence can enable a larger set of workers equipped with necessary foundational training to perform higher-stakes decision-making tasks currently arrogated to elite experts, such as doctors, lawyers, software engineers and college professors. In essence, AI -- used well -- can assist with restoring the middle-skill, middle-class heart of the US labor market that has been hollowed out by automation and globalization."

"Prior to the Industrial Revolution, goods were handmade by skilled artisans: wagon wheels by wheelwrights; clothing by tailors; shoes by cobblers; timepieces by clockmakers; firearms by blacksmiths."

"Unlike the artisans who preceded them, however, expert judgment was not necessarily needed -- or even tolerated -- among the 'mass expert' workers populating offices and assembly lines."

"As a result, the narrow procedural content of mass expert work, with its requirement that workers follow rules but exercise little discretion, was perhaps uniquely vulnerable to technological displacement in the era that followed."

"Stemming from the innovations pioneered during World War II, the Computer Era (AKA the Information Age) ultimately extinguished much of the demand for mass expertise that the Industrial Revolution had fostered."

"Because many high-paid jobs are intensive in non-routine tasks, Polanyi's Paradox proved a major constraint on what work traditional computers could do. Managers, professionals and technical workers are regularly called upon to exercise judgment (not rules) on one-off, high-stakes cases."

Polanyi's Paradox, named for Michael Polanyi, who observed in 1966 that "We can know more than we can tell," is the idea that "non-routine" tasks involve "tacit knowledge" that can't be written out as procedures -- and hence can't be coded into a computer program. But AI systems don't have to be coded explicitly and can learn this "tacit knowledge" like humans.

"Pre-AI, computing's core capability was its faultless and nearly costless execution of routine, procedural tasks."

"AI's capacity to depart from script, to improvise based on training and experience, enables it to engage in expert judgment -- a capability that, until now, has fallen within the province of elite experts."

Commentary: I feel like I had to make the mental switch from expecting AI to automate "routine" work to "mental" work, i.e. what matters is mental-vs-physical, not creative-vs-routine. Now we're right back to talking about the creative-vs-routine distinction. |

|

|

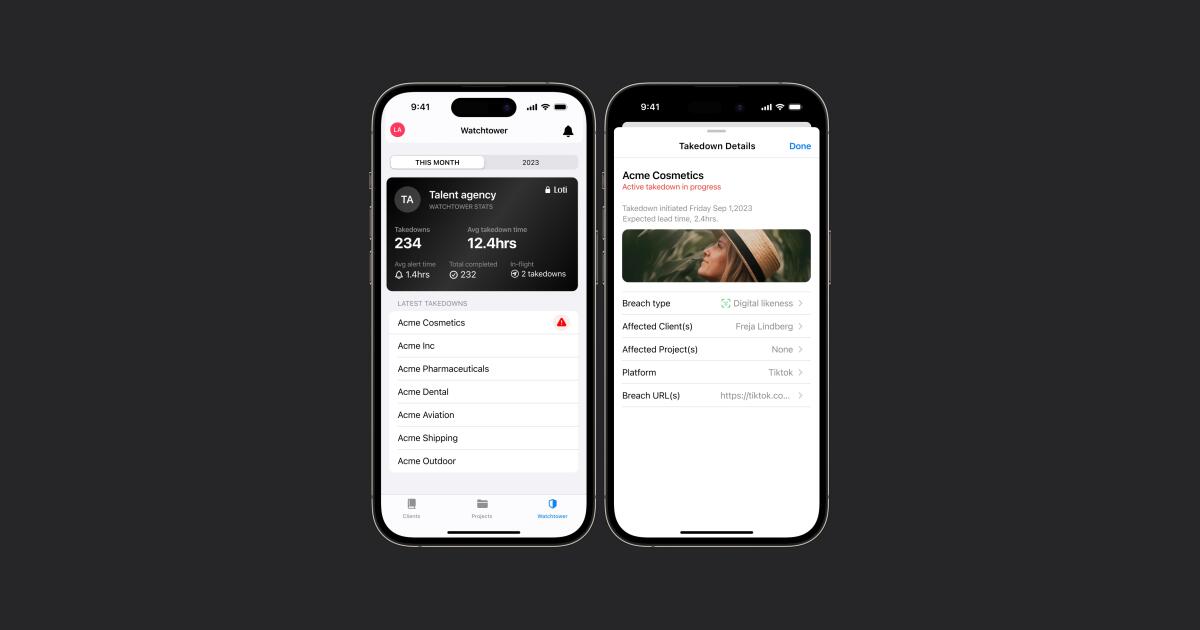

"The rise of generative AI and 'deepfakes' -- or videos and pictures that use a person's image in a false way -- has led to the wide proliferation of unauthorized clips that can damage celebrities' brands and businesses."

"Talent agency WME has inked a partnership with Loti, a Seattle-based firm that specializes in software used to flag unauthorized content posted on the internet that includes clients' likenesses. The company, which has 25 employees, then quickly sends requests to online platforms to have those infringing photos and videos removed."

This company Loti has a product called "Watchtower", which watches for your likeness online.

"Loti scans over 100M images and videos per day looking for abuse or breaches of your content or likeness."

"Loti provides DMCA takedowns when it finds content that's been shared without consent."

They also have a license management product called "Connect", and a "fake news protection" program called "Certify".

"Place an unobtrusive mark on your content to let your fans know it's really you."

"Let your fans verify your content by inspecting where it came from and who really sent it."

They don't say anything about how their technology works. |

|

|

| "Sonauto: Create hit songs with AI". Another AI music generator. I listened to Blue Scooby Doo ft. AI Beatles and Thanks Hacker News, done in the style of a country song. Pretty impressive. Give a listen. Check out the "Top of Week" and "Top of All Time" categories. I haven't had time to actually try creating a song, but if you do, let me know how it goes. |

|

|

"Texas will use computers to grade written answers on this year's STAAR tests."

STAAR stands for "State of Texas Assessments of Academic Readiness" and is a standardized test given to elementary through high school students. It replaced an earlier test, the TAKS, starting in 2012.

"The Texas Education Agency is rolling out an 'automated scoring engine' for open-ended questions on the State of Texas Assessment of Academic Readiness for reading, writing, science and social studies. The technology, which uses natural language processing, a building block of artificial intelligence chatbots such as GPT-4, will save the state agency about $15 million to 20 million per year that it would otherwise have spent on hiring human scorers through a third-party contractor."

"The change comes after the STAAR test, which measures students' understanding of state-mandated core curriculum, was redesigned in 2023. The test now includes fewer multiple choice questions and more open-ended questions -- known as constructed response items." |

|

|

| The Daily Show with Jon Stewart did a segment on AI and jobs. Basically, we're all going to get helpful assistants which will make us more productive, so it's going to be great, except, more productive means fewer humans employed, but don't worry, that's just the 'human' point of view. (First 8 minutes of this video.) |

|

|

Udio is an AI music generator. I went through the staff picks. I was impressed that it rendered "acoustic" music well with lyrics -- the singing seemed actually good and the lyrics made sense. It does genres like jazz and country.

They don't say anything about how the system works. They say the program is free during the beta program. |

|

|

"Majority of ASEAN people favor China over US, survey finds."

ASEAN stands for Association of Southeast Asian Nations, and consists of Indonesia, the Philippines, Vietnam, Thailand, Myanmar/Burma, Malaysia, Cambodia, Laos, Brunei, and Singapore.

"According to the State of Southeast Asia 2024 survey, compiled by the ISEAS-Yusof Ishak Institute, 50.5% of respondents opted for China and 49.5% preferred the US if ASEAN had to pick sides -- the first time Beijing edged past Washington since the annual survey started asking the question in 2020."

Countries that preferred the US: the Philippines (83.3%), Vietnam (79%), Singapore, Myanmar/Burma, and Cambodia. Countries that preferred China: Brunei, Laos (70.6%), Indonesia (73.2%), and Malaysia (75.1%). |

|

|

In 1973, there was a total solar eclipse that lasted 74 minutes. Well, sort of -- it was done with a clever trick. Viewed from any spot on Earth, the eclipse lasted a little over 7 minutes, but viewed from a supersonic aircraft flying in the direction of the eclipse, the duration could be stretched out.

The aircraft was a modified Concorde, and it wasn't *quite* able to keep up with the speed the moon's shadow moved over the face of the Earth. So it couldn't stretch out the length of the eclipse indefinitely. But it was able to stretch it out quite a lot.

This got me curious how fast the moon's shadow moves. For the eclipse we just had, I measured the distance from where it first lands on the North American continent, just south of Mazatlan, Mexico, to where it leaves the North American continent, near Rivière-au-Portage, New Brunswick, Canada, as 4,530 km (metric system!), or 2,815 miles. The time it takes to cross that distance is 1 hour, 27 minutes, 33 seconds. Doing the arithmetic, that works out to an average speed of 3,105 km per hour, or 1,929 miles per hour. Translating that into a Mach number (using the sea-level speed of sound, roughly 1,235 km per hour) we get Mach 2.51. The Concorde's maximum speed was Mach 2.04. The SR-71 has a maximum speed of Mach 3.2, so an SR-71 could in fact keep up with the moon's shadow.
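For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The distance and crossing time are the figures quoted above. The speed of sound is my assumption (the sea-level value, about 343 m/s), since the Mach number depends on which value you pick.

```python
# Average ground speed of the moon's shadow, using the figures quoted above.
distance_km = 4530.0                   # measured path length across North America
duration_h = 1 + 27 / 60 + 33 / 3600   # 1 hour, 27 minutes, 33 seconds

KM_PER_MILE = 1.609344
# Assumption: Mach number is taken against the sea-level speed of sound (~343 m/s).
SOUND_KMH = 343.0 * 3.6

speed_kmh = distance_km / duration_h
speed_mph = speed_kmh / KM_PER_MILE
mach = speed_kmh / SOUND_KMH

print(f"{speed_kmh:.0f} km/h, {speed_mph:.0f} mph, Mach {mach:.2f}")

# Compare against the top speeds mentioned in the text.
for name, top_mach in [("Concorde", 2.04), ("SR-71", 3.2)]:
    verdict = "can" if top_mach >= mach else "cannot"
    print(f"{name} (Mach {top_mach}) {verdict} keep up with the shadow")
```

This is only an average over the whole crossing. The shadow's ground speed varies along the path, so treat the result as a rough figure.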

The other question is why eclipses near the equator can last more than 7 minutes while ours are much shorter up here in North America, at around 4 and a half minutes? Apparently the answer to this question is very similar to the supersonic airplane question. The moon's shadow moves from west to east during an eclipse, and the earth itself rotates from west to east as well, in the same direction -- this is why the sun rises in the east. So, the closer you are to the equator during an eclipse, the more the rotation of the earth itself mimics the movement of the supersonic airplane and elongates the eclipse. |

|

|

This Chinese website ranks large language models. Qwen 1.5 made by Alibaba is number 2 on the list. Number 5 on the list is Chinese-Mixtral, a Chinese-language version of the French-made Mixtral.

Going in order: 1. DBRX made by Databricks, 2. Qwen 1.5 made by Alibaba, 3. Jamba made by somebody, 4. stabilityai/stable-code-3b from Stability AI (a company that has been unstable lately), 5. Chinese-Mixtral, 6. devika, 7. Evolutionary Model Merge, 8. SDXS by somebody, 9. Champ by somebody, 10. AniPortrait by somebody. |

|

|

Why this developer is no longer using Copilot. He feels his programming skills are atrophying. He finds himself pausing to wait for Copilot to write the code, and doesn't enjoy programming that way. The AI-generated code is often wrong or out-of-date and has to be fixed. And using Copilot is a privacy issue because your code is shared with Copilot.

I thought this was quite interesting. I tried Copilot in VSCode and I figured I wasn't using it much because I'm a vim user. So I tracked down the Neovim plug-in & got it working in vim, but still found I don't use it. Now I've come to feel it's great for certain use cases and bad for others. Where it's great is writing "boilerplate" code for using a public API. You just write a comment describing what you want to do and the beginning of the function, and Copilot spits out practically all the rest of the code for your function -- no tedious hours studying the documentation from the API provider.

But that's not the use case I actually engage in in real life. Most of what I do is either making a new UI, or porting code from PHP to Go. For the new UI, AI has been helpful -- I can take a screenshot, input it to ChatGPT, and ask it how to improve the UI. (I'm going to be trying this with Google's Gemini soon but I haven't tried it yet.) When it makes suggestions, I can ask it what HTML+CSS is needed to implement those suggestions. I've found it gets better and better for about 6 iterations. But notice that Copilot isn't part of the loop. I'm jumping into dozens of files and making small changes, and that's a use case where Copilot just isn't helpful.

For porting code from PHP to Go, I modified a full-fledged PHP parser to transpile code to Go, and this has been critical because it's important that certain things, especially strings, get ported over *exactly* -- no room for errors. So this system parses PHP strings using PHP's parsing rules, and outputs Go strings using Go's parsing rules, and is always 100% right. Copilot isn't part of the loop and doesn't help.
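To give a flavor of what "parse with PHP's rules, output with Go's rules" means, here is a small sketch in Python. It is not my actual transpiler. It handles only PHP single-quoted literals, the function names are made up for illustration, and it assumes the input is text rather than arbitrary bytes.

```python
# Escapes needed inside a Go interpreted ("double-quoted") string literal.
GO_ESCAPES = {"\\": "\\\\", '"': '\\"', "\n": "\\n", "\r": "\\r", "\t": "\\t"}


def php_single_quoted_to_value(literal: str) -> str:
    """Decode a PHP single-quoted literal (quotes included) into its value."""
    if len(literal) < 2 or literal[0] != "'" or literal[-1] != "'":
        raise ValueError("expected a PHP single-quoted literal")
    body = literal[1:-1]
    out = []
    i = 0
    while i < len(body):
        ch = body[i]
        # In single-quoted PHP strings only \\ and \' are escapes;
        # any other backslash stays in the string as-is.
        if ch == "\\" and i + 1 < len(body) and body[i + 1] in "\\'":
            out.append(body[i + 1])
            i += 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def value_to_go_literal(value: str) -> str:
    """Encode a string value as a Go interpreted string literal."""
    parts = []
    for ch in value:
        if ch in GO_ESCAPES:
            parts.append(GO_ESCAPES[ch])
        elif ord(ch) < 0x20 or ord(ch) == 0x7F:
            parts.append(f"\\x{ord(ch):02x}")  # remaining control characters
        else:
            parts.append(ch)  # Go source is UTF-8, so other text passes through
    return '"' + "".join(parts) + '"'


def php_to_go(literal: str) -> str:
    """Hypothetical helper: PHP single-quoted literal in, Go literal out."""
    return value_to_go_literal(php_single_quoted_to_value(literal))


print(php_to_go(r"'It\'s a \n test'"))
```

The point of going through the decoded value in the middle is that the two languages disagree about escapes: `\n` inside a PHP single-quoted string is a literal backslash followed by `n`, so the Go output has to double the backslash rather than copy the text across.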

Another place I've found AI incredibly useful is debugging problems where I have no clue what the problem might be. This goes back to using other people's large systems such as the public APIs mentioned earlier. Every now and then you get cryptic error messages or some other bizarre malfunction, and endless Google searching doesn't help. I can go to ChatGPT, Gemini, Claude, Perplexity, DeepSeek (and others, but those are the main ones I've been using) and say hey, I'm getting this cryptic error message or this weird behavior, and it can give you a nice list of things you might try. That can get you unstuck when you'd otherwise be very stuck.

It's kinda funny because, obviously I'm an avid follower of what's going on in AI, and happy to try AI tools, and I constantly run across other developers who say "Copilot has made me twice as productive!" or "Copilot has made me five times as productive!" or somesuch. I've wondered if there's something wrong with me because I haven't experienced those results at all. But AI has been helpful in *other* areas nobody ever seems to talk about. |

|

|

The XZ attack has taken the world of cybersecurity by storm. This video provides a concise overview. (If you prefer text, there is a link to a text-based FAQ below.)

It begins with a clever "social engineering" attack, where two people play "good cop bad cop" to guilt-trip the maintainer of XZ. First I should probably mention that XZ Utils is a compression system used by Linux, in lots of places including package managers, build (code compilation) systems, and ssh, the "secure shell" system that enables people to log in to remote servers and run commands. (I myself use ssh dozens of times every day -- if you don't work with servers you wouldn't know, but this is how servers are managed all over the internet.) Getting back to the "social engineering" attack, the attackers successfully demoralized the project maintainer, who was an open source developer working in his spare time and not paid. He eventually gave up and made the "good cop" co-maintainer of the project.

The attack itself is pretty interesting, too. The attacker did not touch ssh, or at least not the code for ssh itself. He changed test code. And not in an obvious way -- he changed a "binary blob" that is opaque to people examining changes to the code to decide whether to accept the changes on their systems or not. The binary blob would get decompressed at build time, and it turned out that inside it was a bash script (bash is another one of those Linux shells), and the bash script would get executed. The bash script would modify the ssh system in such a way that a certain public key would be replaced by a different one. The purpose of the original public key was to make sure only trusted people with the corresponding private key could update a running ssh system. With the attacker's key in place, the attacker can now change running ssh systems. Not only that, but an ssh installation on a server runs with root privileges, because it has to be able to authenticate any user and then launch a command-line shell for that user with that user's privileges. So the attacker becomes able to log in as root on any Linux server infected with the attack -- which could have eventually become more or less all of them had the attack not been discovered.

To me, this attack is interesting on so many levels:

1) It comes through the "supply chain" -- attacking open source at the point where contributors (often unpaid) submit their contributions.

2) It involves a "social engineering" attack on the supply chain, something it had never occurred to me was even possible before.

3) There was a long delay between the social engineering attack and the technical attack -- about 2 years. The attackers spent 2 years building trust to exploit later.

4) It attacks one piece of software (ssh) by attacking a completely different and apparently unrelated piece of software (XZ Utils).

5) It attacks the software not by attacking the software's own code directly, but by attacking its *test* code.

6) It carries out the attack by running malicious code at *build* time instead of runtime. (The build of XZ Utils is part of the build of ssh.)

7) It attacks a cryptosystem by replacing a legitimate key with the attacker's key and getting the attacker's key "officially" distributed.

8) Had it been successful, the implications would have been *huge* -- it would have given the attacker access to practically every Linux server everywhere. (Well, every Linux server, pretty much, uses ssh but the attack initially targeted RedHat & Debian, so maybe it wouldn't have spread to everywhere.)

9) The attack was discovered accidentally, because it modified its target's *performance*, not any other aspect of its behavior.

I hadn't mentioned that last one yet, but yeah, the attack was discovered by a person who was doing performance benchmarks on a completely unrelated project (to do with the Postgres database), which just happened to include automated ssh logins as part of the testing system, and the ssh logins suddenly slowed down for no apparent reason. In trying to figure out what had gone wrong, he discovered the attack.

This has huge implications for the future of open source software and for trust in all the projects and maintainers and regular software updates that are done on a daily basis all over the world. Some are predicting wholesale abandonment of the package distribution systems used currently throughout the Linux world. At the very least, everyone contributing to projects that become standard parts of Linux distributions is going to come under much greater scrutiny.

And in case you're wondering, no, nobody knows who the attackers were, at least as far as I know. And no, no one knows how many other attacks might exist "out there" in the Linux software supply chain. |

|

|

"Stubborn visionaries & pigheaded fools: How do you know when to stop, versus when to push through?"

"The puzzle:"

"Scenario 1 (S1)"

"At time (A) you start an AdWords campaign."

"At time (B) it's obviously not working; a waste of time and money."

"...But you keep trying, and by time (C), it's working! You did it!"

"Scenario 2 (S2)"

"At time (A) you start an AdWords campaign."

"At time (B) it's obviously not working; a waste of time and money."

"...But you keep trying, and by time (C), it's still not working, and you've wasted even more time and money. What a waste!"

"We've all experienced both scenarios, not just in AdWords but in life in general."

"S1 we call 'success through perseverance,' and you've heard this echoed in many platitudes".

"S2 we call 'failure through obstinance,' and you've heard this echoed in many platitudes."

"How do you know, at time (B), which scenario you're in?" "You cannot know. Not for AdWords, not for product design, not for the vision of your company and the market you hope to create around it, not for almost anything, big or small. It all looks the same at point B. Venture capitalists don't know either, though it's their job to know." |

|

|

Lumen Orbit is a new startup that wants to "put hundreds of satellites in orbit, with the goal of processing data in space before it's downlinked to customers on Earth."

"Lumen's business plan calls for deploying about 300 satellites in very low Earth orbit, at an altitude of about 315 kilometers (195 miles). The first satellite would be a 60-kilogram (132-pound) demonstrator that's due for launch in May 2025."

"We started Lumen with the mission of launching a constellation of orbital data centers for in-space edge processing, Essentially, other satellites will send our constellation the raw data they collect. Using our on-board GPUs, we will run AI models of their choosing to extract insights, which we will then downlink for them. This will save bandwidth downlinking large amounts of raw data and associated cost and latency."

If you're wondering who wants this, there's a bunch of investors listed in the article, and it says they've raised $2.4 million to start with. |

|