|

"EyeEm, the Berlin-based photo-sharing community that exited last year to Spanish company Freepik after going bankrupt, is now licensing its users' photos to train AI models. Earlier this month, the company informed users via email that it was adding a new clause to its Terms & Conditions that would grant it the rights to upload users' content to 'train, develop, and improve software, algorithms, and machine-learning models.' Users were given 30 days to opt out by removing all their content from EyeEm's platform."

All your photos are belong to us. |

|

|

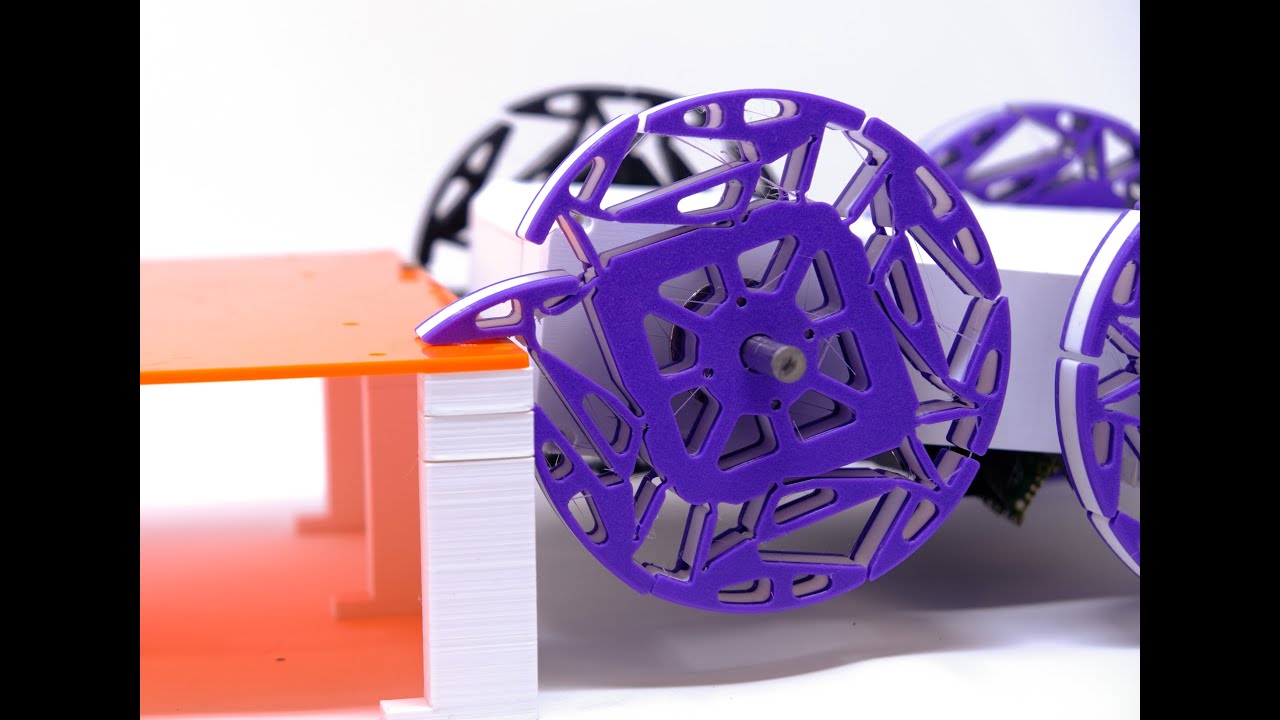

PaTS-Wheel is "a passively-transformable single-part wheel for mobile robot navigation".

The wheel has a "flexure" consisting of a pad, a claw, and a coupler. On a smooth surface it doesn't activate, and the shape of the wheel stays very close to a perfect wheel. On a rough surface, the pad gets depressed and extends the claw, so the wheel has claws that enable it to climb. This is all done "passively" -- no motors or sensors are necessary. |

|

|

"NASA's Deep Space Optical Communications technology demonstration continues to break records."

267 megabits per second at 31 million kilometers, 25 megabits per second at 226 million kilometers (about 1.5x the Earth-Sun distance). Other than saying "near infrared" and that it's done with lasers, they don't say what wavelengths are used or how exactly it's done. I guess they have a sensitive photon-counting camera and a laser transmitter plugged into a telescope on the spacecraft (called Psyche), and on Earth they have a receiver near JPL that can also send up a "beacon" laser that gives the telescope on the spacecraft something to lock on to.
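
As a rough sanity check on those two figures, here is a minimal sketch that compares them against a naive inverse-square estimate. The assumption that data rate scales in proportion to received power (and so falls off with the square of distance) is mine, not NASA's, and the function name is made up for illustration. Only the two rates and two distances come from the announcement.

```python
# Figures quoted in the NASA announcement.
NEAR_KM = 31e6      # distance for the Dec. 11, 2023 test
NEAR_MBPS = 267.0   # maximum rate at that distance
FAR_KM = 226e6      # distance for the April 8 test
FAR_MBPS = 25.0     # maximum rate at that distance


def inverse_square_rate(rate_near, d_near, d_far):
    """Scale a data rate by (d_near / d_far)**2.

    Assumption (mine): rate is proportional to received power, and
    received power falls off with the square of the distance.
    """
    return rate_near * (d_near / d_far) ** 2


distance_ratio = FAR_KM / NEAR_KM
predicted = inverse_square_rate(NEAR_MBPS, NEAR_KM, FAR_KM)

print(f"distance ratio:         {distance_ratio:.2f}x")
print(f"naive inverse-square:   {predicted:.1f} Mbps")
print(f"reported at far range:  {FAR_MBPS:.1f} Mbps")
```

The distance ratio comes out a bit above 7, matching the "more than seven times farther away" in the quote below, and the naive estimate lands at roughly 5 Mbps, well under the reported 25 Mbps. That says the simple proportionality is too crude to describe the real link, which is no surprise given that these are maximum rates from two separate tests.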

"NASA's optical communications demonstration has shown that it can transmit test data at a maximum rate of 267 megabits per second (Mbps) from the flight laser transceiver's near-infrared downlink laser -- a bit rate comparable to broadband internet download speeds."

"That was achieved on Dec. 11, 2023, when the experiment beamed a 15-second ultra-high-definition video to Earth from 19 million miles away (31 million kilometers, or about 80 times the Earth-Moon distance). The video, along with other test data, including digital versions of Arizona State University's Psyche Inspired artwork, had been loaded onto the flight laser transceiver before Psyche launched last year."

"Now that the spacecraft is more than seven times farther away, the rate at which it can send and receive data is reduced, as expected. During the April 8 test, the spacecraft transmitted test data at a maximum rate of 25 Mbps, which far surpasses the project's goal of proving at least 1 Mbps was possible at that distance." |

|

|

Astribot S1: Hello World! 1x speed. No teleoperation. Stacks and unstacks cups. Pulls a tablecloth out from under stacked wine glasses. Puts away items into drawers and containers. Slices vegetables. Flips pancakes. Takes caps off drinks. Pours drinks. Irons shirt. Folds shirt. Hammers stool. Waters plant. Vacuums. Shoots trash into a trash can like playing basketball. Draws calligraphy.

No idea how this robot works. This comes out of nowhere from the Astribot company that seems to have just come into existence in Shenzhen, China. |

|

|

Vidu is a Chinese video generation AI competitive with OpenAI's Sora, according to rumor (neither is available for the public to use). It's a collaboration between Tsinghua University in Beijing and a company called Shengshu Technology.

"Vidu is capable of producing 16-second clips at 1080p resolution -- Sora by comparison can generate 60-second videos. Vidu is based on a Universal Vision Transformer (U-ViT) architecture, which the company says allows it to simulate the real physical world with multi-camera view generation. This architecture was reportedly developed by the Shengshu Technology team in September 2022 and as such would predate the diffusion transformer (DiT) architecture used by Sora."

"According to the company, Vidu can generate videos with complex scenes adhering to real-world physics, such as realistic lighting and shadows, and detailed facial expressions. The model also demonstrates a rich imagination, creating non-existent, surreal content with depth and complexity. Vidu's multi-camera capabilities allows for the generation of dynamic shots, seamlessly transitioning between long shots, close-ups, and medium shots within a single scene."

"A side-by-side comparison with Sora reveals that the generated videos are not at Sora's level of realism." |

|

|

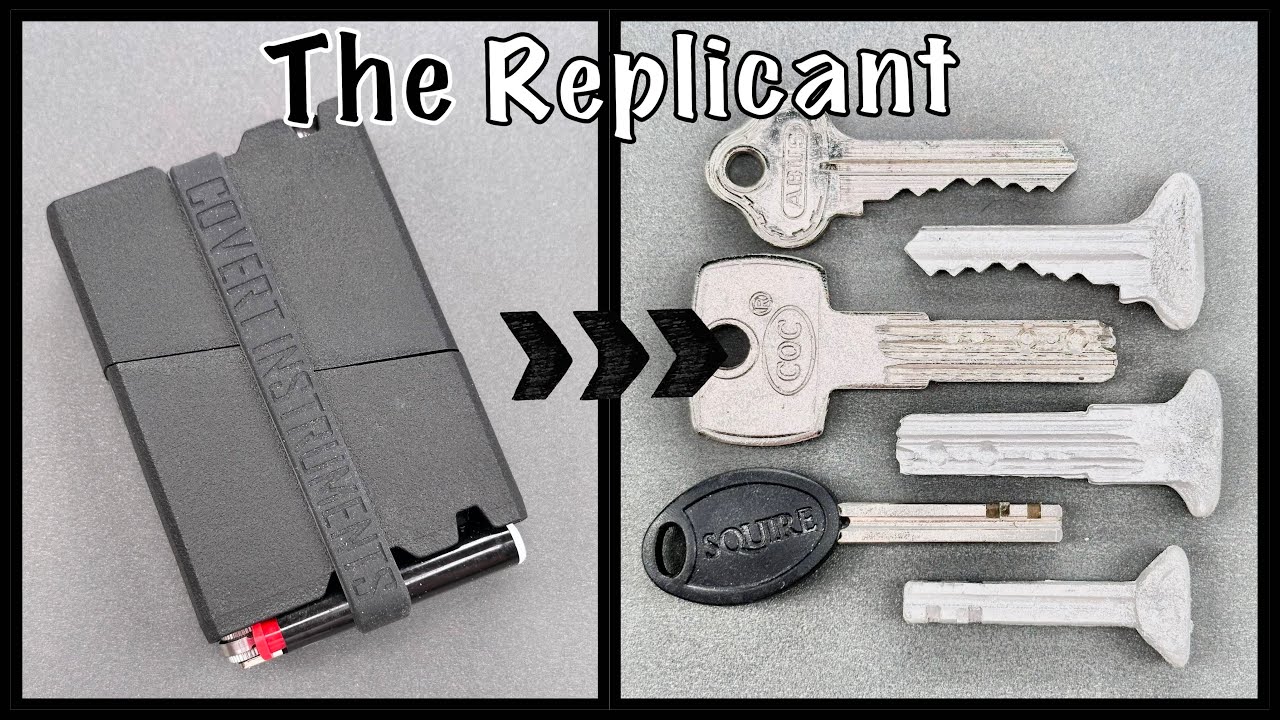

The Replicant is the world's fastest "key cast and mold kit". Which is another way of saying if you can get possession of a physical key for a few seconds (well, realistically, a few minutes), you can duplicate it with this kit.

You have to melt a metal ingot in a spoon with a cigarette lighter and pour the liquid metal into a key cast made by putting the key into a clamshell with polymer clay and squeezing it hard enough to make a cast of the key you are duplicating. |

|

|

The Standard Model of quantum physics. A look "under the hood".

By way of commentary: When I was in high school I was very frustrated by the fact that chemistry seemed like a random hodgepodge that didn't fit together in a nice, perfect, consistent manner like mathematics. What I didn't understand at the time is that the true description of how chemistry works is quantum physics, and that everything in the chemistry books is just useful approximations of the results from quantum physics that people have discovered over the centuries. I find studying chemistry much more worthwhile now that I'm going into the subject with the mindset that what I'm learning is a collection of useful approximations of quantum physics, which is largely intractable.

On the subject of quantum physics, what most of us usually see are those "Standard Model" particle tables that are done in the style of the periodic table of the elements from chemistry, but again we don't see the actual quantum physics equations behind them. Why doesn't anyone ever show us the actual Standard Model equations? Physicists just seem to pull all kinds of principles and effects and assorted magic out of nowhere without showing us the Standard Model equations that it all comes from.

Well, first of all, it's "equation", singular -- just one -- and once you see it, you'll see why people haven't been showing it to you. It's really, really big. It's a partial differential equation, which most people don't understand, and even if you do, it's hard to have any intuition for how a differential equation behaves once it goes beyond 2 or 3 simple terms (at least that is the case for me). And it's full of Greek letters and other funky mathematical notation.

And remember, the Standard Model is only half of reality (or what we know of it) -- the other half is general relativity, which isn't represented in this equation. |

|

|

World's largest 3D printer. The University of Maine smashed the former Guinness World Record held by... the University of Maine. That's right, the previous world record was held by a previous version of the same 3D printer. The new one is 4x larger.

"The new printer, dubbed Factory of the Future 1.0 (FoF 1.0), was unveiled on April 23 at the Advanced Structures and Composites Center (ASCC) to an audience that included representatives from the US Department of Defense, US Department of Energy, the Maine State Housing Authority, industry partners and other stakeholders who plan to utilize this technology. The thermoplastic polymer printer is designed to print objects as large as 96 feet long by 32 feet wide by 18 feet high, and can print up to 500 pounds per hour. It offers new opportunities for eco-friendly and cost-effective manufacturing for numerous industries, including national security, affordable housing, bridge construction, ocean and wind energy technologies and maritime vessel fabrication. The design and fabrication of this world-first printer and hybrid manufacturing system was made possible with support from the Office of the Secretary of Defense through the US Army Corps of Engineers."

"FoF 1.0 isn't merely a large-scale printer; it dynamically switches between various processes such as large-scale additive manufacturing, subtractive manufacturing, continuous tape layup and robotic arm operations. Access to it and MasterPrint, the ASCC's first world-record breaking 3D printer, will streamline manufacturing innovation research at the center. The two large printers can collaborate by sharing the same end-effectors or by working on the same part."

"Continuous tape layup" refers to how fiberglass and carbon fiber composite components can be manufactured by unrolling a "tape" "pre-impregnated with adhesive resin". |

|

|

X-Ray is a detector for bad redactions in PDF files. You can use it to remove redactions and reveal the original content -- if people do the redaction badly by drawing a black rectangle or a black highlight on top of black text.

But that's not the purpose of this tool. This tool isn't to help you, the PDF file reader -- it's to help the people who make the PDFs -- so they don't make mistakes and release badly redacted PDFs. If they use this tool, there will be nothing for you to reveal by using it. |

|

|

WebLlama is "building agents that can browse the web by following instructions and talking to you".

This is one of those things that, if I had time, would be fun to try out. You have to download the model from Hugging Face and run it on your machine.

"The goal of our project is to build effective human-centric agents for browsing the web. We don't want to replace users, but equip them with powerful assistants."

"We are build on top of cutting edge libraries for training Llama agents on web navigation tasks. We will provide training scripts, optimized configs, and instructions for training cutting-edge Llamas."

If it works, this technology has a serious possible practical benefit for people with vision impairment who want to browse the web. |

|

|

"Are large language models superhuman chemists?"

So what these researchers did was make a test -- a benchmark. They made a test of 7,059 chemistry questions, spanning the gamut of chemistry: computational chemistry, physical chemistry, materials science, macromolecular chemistry, electrochemistry, organic chemistry, general chemistry, analytical chemistry, chemical safety, and toxicology.

They recruited 41 chemistry experts to carefully validate their test.

They devised the test so that it could be evaluated in a completely automated manner. This meant relying on multiple-choice questions, rather than open-ended questions, more than they wanted to. The test has 6,202 multiple-choice questions and 857 open-ended questions (88% multiple-choice). To score the open-ended questions automatically, they had to write parsers that find the numerical answers in the model output.
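
To give a feel for what such a parser involves, here is a minimal sketch. It is not ChemBench's actual code: the function names, the "take the last number in the output" rule, and the 1% tolerance are all my own assumptions for illustration.

```python
import math
import re

# Matches integers, decimals, and scientific notation such as 6.022e23.
# Limitation: thousands separators like "1,234" are not handled.
_NUMBER = re.compile(r"[-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?")


def extract_last_number(text):
    """Return the last number found in the text as a float, or None."""
    matches = _NUMBER.findall(text)
    return float(matches[-1]) if matches else None


def is_correct(model_output, expected, rel_tol=0.01):
    """Hypothetical scoring rule: last number within 1% of the expected value."""
    value = extract_last_number(model_output)
    return value is not None and math.isclose(value, expected, rel_tol=rel_tol)


print(is_correct("Avogadro's number is roughly 6.02e23", 6.022e23))
print(is_correct("I am not sure.", 6.022e23))
```

Even this toy version shows why multiple-choice is easier to automate: a model that restates the question, gives units, or lists several candidate values can easily trip up a rule like "take the last number".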

In addition, they ask the models to say how confident they are in their answers.

Before I tell you the ranking, the researchers write:

"On the one hand, our findings underline the impressive capabilities of LLMs in the chemical sciences: Leading models outperform domain experts in specific chemistry questions on many topics. On the other hand, there are still striking limitations. For very relevant topics the answers models provide are wrong. On top of that, many models are not able to reliably estimate their own limitations. Yet, the success of the models in our evaluations perhaps also reveals more about the limitations of the exams we use to evaluate models -- and chemistry -- than about the models themselves. For instance, while models perform well on many textbook questions, they struggle with questions that require some more reasoning. Given that the models outperformed the average human in our study, we need to rethink how we teach and examine chemistry. Critical reasoning is increasingly essential, and rote solving of problems or memorization of facts is a domain in which LLMs will continue to outperform humans."

"Our findings also highlight the nuanced trade-off between breadth and depth of evaluation frameworks. The analysis of model performance on different topics shows that models' performance varies widely across the subfields they are tested on. However, even within a topic, the performance of models can vary widely depending on the type of question and the reasoning required to answer it."

And with that, I'll tell you the rankings. You can log in to their website at ChemBench.org and see the leaderboard any time for the latest rankings. At this moment I am seeing:

gpt-4: 0.48

claude2: 0.29

GPT-3.5-Turbo: 0.26

gemini-pro: 0.25

mistral_8x7b: 0.24

text-davinci-003: 0.18

Perplexity 7B Chat: 0.18

galactica_120b: 0.15

Perplexity 7B online: 0.1

fb-llama-70b-chat: 0.05

The number that follows each model name is its score on the benchmark (higher is better). You'll notice there appears to be a gap between GPT-4 and Claude 2. One interesting thing about the leaderboard is you can show humans and AI models on the same leaderboard. When you do this, the top human has a score of 0.51 and beats GPT-4, then you get GPT-4, then you get a whole bunch of humans in between GPT-4 and Claude 2. So it appears that the gap is real. However, Claude 2 isn't the latest version of Claude. Since the evaluation, Claude 3 has come out, so maybe sometime in the upcoming months we'll see the leaderboard revised and see where Claude 3 comes in. |

|

|

FutureSearch.AI lets you ask a language model questions about the future.

"What will happen to TikTok after Congress passed a bill on April 24, 2024 requiring it to delist or divest its US operations?"

"Will the US Department of Justice impose behavioral remedies on Apple for violation of antitrust law?"

"Will the US Supreme Court grant Trump immunity from prosecution in the 2024 Supreme Court Case: Trump v. United States?"

"Will the lawsuit brought against OpenAI by the New York Times result in OpenAI being allowed to continue using NYT data?"

"Will the US Supreme Court uphold emergency abortion care protections in the 2024 Supreme Court Case: Moyle v. United States?"

How does it work?

They say rather than asking a large language model a question in a 1-shot manner, they guide it through 6 steps for reasoning through hard questions. The 6 steps are:

1. "What is a basic summary of this situation?"

2. "Who are the important people involved, and what are their dispositions?"

3. "What are the key facets of the situation that will influence the outcome?"

4. "For each key facet, what's a simple model of the distribution of outcomes from past instances that share that facet?"

5. "How do I weigh the conflicting results of the models?"

6. "What's unique about this situation to adjust for in my final answer?"
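
The six steps above can be sketched as a simple loop. This is only a guess at the shape of it: FutureSearch doesn't say how the steps are chained, so feeding each earlier answer back into the next prompt is my assumption, and `ask` is a hypothetical stand-in for whatever function sends a prompt to a language model and returns its reply.

```python
from typing import Callable, List

# The six questions, as quoted by FutureSearch.
STEPS = [
    "What is a basic summary of this situation?",
    "Who are the important people involved, and what are their dispositions?",
    "What are the key facets of the situation that will influence the outcome?",
    "For each key facet, what's a simple model of the distribution of "
    "outcomes from past instances that share that facet?",
    "How do I weigh the conflicting results of the models?",
    "What's unique about this situation to adjust for in my final answer?",
]


def forecast(question: str, ask: Callable[[str], str]) -> List[str]:
    """Walk a model through the six steps, one prompt per step.

    `ask` is hypothetical: any callable that takes a prompt string and
    returns the model's reply. Earlier answers are included in later
    prompts (an assumption, not something FutureSearch documents).
    """
    transcript = [f"Question: {question}"]
    answers = []
    for i, step in enumerate(STEPS, start=1):
        prompt = "\n".join(transcript + [f"Step {i}: {step}"])
        answer = ask(prompt)
        transcript.append(f"Step {i}: {step}\nAnswer: {answer}")
        answers.append(answer)
    return answers


# Usage with a stub in place of a real model call.
replies = forecast("Will X happen by 2025?", lambda prompt: "stub reply")
print(len(replies))
```

The contrast with the 1-shot approach is that the model has to commit to a summary, the actors, and base rates before it gets to the final adjustment, instead of jumping straight to an answer.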

See below for a discussion of two other approaches that claim similar prediction quality. |

|

|

MyBestAITool: "The Best AI Tools Directory in 2024".

"Ranked by monthly visits as of April 2024".

"AI Chatbot": ChatGPT, Google Gemini, Claude AI, Poe.

"AI Search Engine": Perplexity AI, You, Phind, metaso.

"AI Photo & Image Generator": Leonardo, Midjourney, Fotor, Yodayo.

"AI Character": CharacterAI, JanitorAI, CrushonAI, SpicyChat AI.

"AI Writing Assistants": Grammarly, LanguageTool, Smodin, Obsidian.

"AI Photo & Image Editor": Remove.bg, Fotor, Pixlr, PhotoRoom.

"AI Model Training & Deployment": civitai, Huggingface, Replicate, google AI.

"AI LLM App Build & RAG": LangChain, Coze, MyShell, Anakin.

"AI Image Enhancer": Cutout Pro, AI Image Upscaler, ZMO.AI, VanceAI.

"AI Video Generator": Runway, Vidnoz, HeyGen, Fliki.

"AI Video Editor": InVideo, Media io, Opus Clip, Filmora Wondershare.

"AI Music Generator": Suno, Moises App, Jammable, LANDR.

No Udio? Really? Maybe it'll show up on next month's stats.

"AI 3D Model Generator": Luma AI, Recraft, Deepmotion, Meshy.

"AI Presentation Generator": Prezi AI, Gamma, Tome, Pitch.com.

"AI Design Assistant": Firefly Adobe, What font is, Hotpot, Vectorizer.

"AI Copywriting Tool": Simplified, Copy.ai, Jasper.ai, TextCortex.

"AI Story Writing": NovelAI, AI Novellist, Dreampress AI, Artflow.

"AI Paraphraser": QuillBot, StealthWriter, Paraphraser, Linguix.

"AI SEO Assistant": vidIQ, Writesonic, Content At Scale, AISEO.

"AI Email Assistant": Klaviyo, Instantly, Superhuman, Shortwave.

"AI Summarizer": Glarity, Eightify, Tactiq, Summarize Tech.

"AI Prompt Tool": FlowGPT, Lexica, PromptHero, AIPRM.

"AI PDF": ChatPDF, Scispace, UPDF, Ask Your PDF.

"AI Meeting Assistant": Otter, Notta, Fireflies, Transkriptor.

"AI Customer Service Assistant": Fin by Intercom, Lyro, Sapling, ChatBot.

"AI Resume Builder": Resume Worded, Resume Builder, Rezi, Resume Trick.

"AI Speech Recognition": Adobe Podcast, Transkriptor, Voicemaker, Assemblyai.

"AI Website Builder": B12.io, Durable AI Site Builder, Studio Design, WebWave AI.

"AI Art Generator": Leonardo, Midjourney, PixAI Art, NightCafe.

"AI Developer Tools": Replit, Blackbox, Weights & Biases, Codeium.

"AI Code Assistant": Blackbox, Phind, Codeium, Tabnine.

"AI Detector Tool": Turnitin, GPTZero, ZeroGPT, Originality.

You can view full lists on all of these and there are even more if you go through the categories on the left side.

No idea where they get their data. I would guess Comscore, but they don't say. |

|

|

"Xaira, an AI drug discovery startup, launches with a massive $1B, says it's 'ready' to start developing drugs."

$1 billion, holy moly, that's a lot.

"The advances in foundational models come from the University of Washington's Institute of Protein Design, run by David Baker, one of Xaira's co-founders. These models are similar to diffusion models that power image generators like OpenAI's DALL-E and Midjourney. But rather than creating art, Baker's models aim to design molecular structures that can be made in a three-dimensional, physical world." |

|

|

"In a series of painstakingly precise experiments, a team of researchers at MIT has demonstrated that heat isn't alone in causing water to evaporate. Light, striking the water's surface where air and water meet, can break water molecules away and float them into the air, causing evaporation in the absence of any source of heat."

"The effect is strongest when light hits the water surface at an angle of 45 degrees. It is also strongest with a certain type of polarization, called transverse magnetic polarization. And it peaks in green light -- which, oddly, is the color for which water is most transparent and thus interacts the least."

They go on to say:

"The astonishing new discovery could help explain mysterious measurements over the years of how sunlight affects clouds, and therefore affect calculations of the effects of climate change on cloud cover and precipitation. It could also lead to new ways of designing industrial processes such as solar-powered desalination or drying of materials."

Does this effect from barely-absorbed visible light really have enough energy for high-performance water desalination driven by solar energy? The paper is paywalled so I guess I'll have to take them at their word. |

|

|

"Installation Guide for DarkGPT Project".

"DarkGPT is an artificial intelligence assistant based on GPT-4-200K designed to perform queries on leaked databases. This guide will help you set up and run the project on your local environment."

Eeep. |

|